Clearpath Robotics was founded six years ago by three college friends who share a passion for making stuff. The company's 80 specialists test rough-terrain robots like the Husky, a four-wheeled robot used by the US Department of Defense.

Husky

They also make drones and even built the Kingfisher robotic boat. But there is one thing they will never build: a robot that can kill.

Clearpath is the first and so far the only robotics company to pledge not to build killer robots. Co-founder and CTO Ryan Garipay made the decision last year, and it has in fact even brought in experts who liked Clearpath's unique ethical position.

The ethics of robot companies have recently come to the fore. We already have one foot in a future where killer robots exist, and we are not yet completely ready for them.

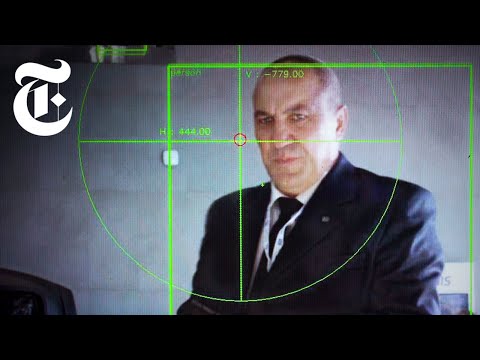

Of course, there is still a long way to go. Korea's Dodam Systems, for example, is building an autonomous robotic turret called the Super aEgis II. It uses thermal imaging cameras and laser rangefinders to identify and attack targets up to 3 kilometers away. The United States is also reportedly experimenting with autonomous missile systems.

Two steps away from the "terminators"

Military drones like the Predator are currently human-operated, but Garipay says they will be fully automatic and autonomous very soon. And this worries him. Very. "Lethal autonomous weapons systems can roll off the assembly line now. But lethal weapons systems that are made in accordance with ethical standards are not even in the plans."

For Garipay, the problem lies in international law. In war there are always situations in which the use of force seems necessary but can also endanger innocent bystanders. How do you create killer robots that will make the right decision in any situation? How can we even determine for ourselves what the right decision should be?

We already see similar problems with autonomous vehicles. Let's say a dog runs across the road. Should the robot car swerve to avoid hitting the dog but put its passengers at risk? What if it's not a dog, but a child? Or a bus? Now imagine a war zone.

"We can't agree on how to write a manual for such a car," says Garipay. "And now we also want to move on to a system that must independently decide whether to use lethal force or not."

Do cool stuff, not weapons

Peter Asaro, founder of the International Committee for Robot Arms Control, has spent the past several years lobbying the international community for a ban on killer robots. He believes that the time has come for "a clear international ban on their development and use." Such a ban would allow companies like Clearpath to keep doing cool things, he said, "without worrying that their products could be used to violate human rights and threaten civilians."

Autonomous missiles are of interest to the military because they solve a tactical problem. When remote-controlled drones, for example, are operating in combat conditions, it is not uncommon for the enemy to jam the sensors or network connection so that the human operator cannot see what is happening or control the drone.

Garipay says that instead of developing missiles or drones that can decide on their own which target to attack, the military should spend money on improving sensors and anti-jamming technology.

"Why don't we take the investment that people would like to make to build autonomous killer robots and invest it in making existing technology more efficient?" he says. "If we set a challenge and overcome this barrier, we can make this technology work for the benefit of people, not just the military."

Recently, there has also been more talk about the dangers of artificial intelligence. Elon Musk worries that an out-of-control AI could destroy life as we know it. Musk donated $10 million last month to research in artificial intelligence.

One of the big questions about how AI will affect our world is how it will merge with robotics. Some, like Baidu researcher Andrew Ng, worry that the coming AI revolution will take people's jobs. Others, like Garipay, fear that it could take lives.

Garipay hopes his colleagues, scientists and machine builders alike, will give thought to what they are doing. That's why Clearpath Robotics took the side of the people. "While we as a company cannot bet $10 million on it, we can bet our reputation."