The five seasons of artificial intelligence. It crushes humans at Go, takes the wheel of their cars and replaces them at work, and at the same time can make medicine more effective. Its long history dates back to 1958, with a huge machine that could tell right from left.

1-0. Then 2-0. And 3-0. In March 2016, the final game was played at the Four Seasons Hotel in Seoul, and it left no shadow of a doubt: the Korean Go champion Lee Sedol had lost 4-1 to a computer running AlphaGo, a program developed by the Google subsidiary DeepMind. For the first time in history, "machine learning" and "artificial neural networks" had decisively beaten the human brain at this game, which is considered harder to simulate than chess. Many experts stressed that they had not expected such a result for several more years.

To a wider audience, this was proof of the power of the new "deep learning" technology, which now lies at the heart of voice assistants, autonomous cars, facial recognition and machine translation, and which also aids medical diagnosis.

The interest that American and Chinese high-tech corporations (Google, Amazon, Facebook, Microsoft, Baidu, Tencent) are showing in machine learning technologies now spans the entire planet, and the subject increasingly spills over from the science sections of newspapers into their economic, analysis and society pages. The fact is that artificial intelligence not only promises big changes in the economy, but also raises fears of new destructive weapons, mass surveillance of citizens, the replacement of employees by robots, and ethical problems.

But where did the AI technological revolution come from? Its story has had plenty of ups and downs. It drew on the achievements of neuroscience and computer science (as the name suggests) and also, more surprisingly, of physics. Its path ran through France, the USA, Japan, Switzerland and the USSR. In this field, rival scientific schools clashed with one another, winning one day and losing the next. Everyone involved needed patience, persistence and a willingness to take risks. There are two winters and three springs in this story.

Self-aware machine

It all started well enough. "The American Army spoke about the idea of a machine that can walk, talk, see, write, reproduce and become aware of itself," wrote The New York Times on July 8, 1958. This one-column article described the Perceptron, created by the American psychologist Frank Rosenblatt in the laboratories of Cornell University. The machine, which cost $2 million at the time, was about the size of two or three refrigerators and was tangled with wires. During a demonstration in front of the American press, the Perceptron determined whether a square drawn on a sheet was on the right or the left. The scientist promised that, with an additional investment of 100 thousand dollars, his machine would be able to read and write within a year. In fact, this took more than 30 years.

Be that as it may, the most important thing about this project was its source of inspiration, which has remained unchanged all the way to AlphaGo and its "relatives". The psychologist Frank Rosenblatt had been immersed in the concepts of cybernetics and artificial intelligence for over a decade. He developed his Perceptron by building on the work of two other North American psychologists: Warren McCulloch and Donald Hebb. The first published a joint article with Walter Pitts in 1943 proposing the creation of "artificial" neurons, modeled on natural ones and endowed with mathematical properties. The second introduced rules in 1949 that allow artificial neurons to learn by trial and error, as the brain does.

Building a bridge between biology and mathematics was a bold move. A computing unit (a neuron) can be active (1) or inactive (0) depending on the stimuli it receives from the other artificial units it is connected to, which together form a complex and dynamic network. More precisely, each neuron receives a set of input signals and compares their combined value with a threshold. If the threshold is exceeded, the output is 1; otherwise it is 0. The authors showed that such a connected system can perform logical operations such as "and" and "or", and can therefore carry out any calculation. In theory.
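To make the threshold idea concrete, here is a minimal sketch of such a unit in Python. The function names, the weights of 1 and the choice to fire when the sum reaches the threshold (rather than strictly exceeds it) are illustrative assumptions, not details taken from the 1943 article.

```python
def threshold_neuron(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs reaches the threshold, else 0.

    Assumption: the unit fires when the sum is greater than or equal to
    the threshold; the article only says "exceeded".
    """
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def logical_and(a, b):
    # Both inputs must be active to reach a threshold of 2.
    return threshold_neuron([a, b], [1, 1], threshold=2)


def logical_or(a, b):
    # A single active input is enough to reach a threshold of 1.
    return threshold_neuron([a, b], [1, 1], threshold=1)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "and:", logical_and(a, b), "or:", logical_or(a, b))
```

The same unit computes "and" or "or" depending only on its threshold, which is why networks of such units can in principle be wired into larger logical circuits.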

This innovative approach to computation led to the first quarrel in our story. Two concepts clashed in an irreconcilable confrontation that continues to this day. On one side are the supporters of neural networks; on the other, the advocates of "classic" computers. The latter rest on three principles: calculations are predominantly sequential, memory and computation are handled by clearly separate components, and every intermediate value must be 0 or 1. For the former, everything is different: the network provides both memory and computation, there is no centralized control, and intermediate values are allowed.

The Perceptron also has the ability to learn, for example to recognize a pattern or classify signals. It works the way a marksman corrects his aim: if the bullet lands to the right, he moves the barrel to the left. At the level of artificial neurons, this means weakening the connections that pull to the right in favor of those that pull to the left, until the target is hit. All that remains is to build this tangle of neurons and find a way to connect them.
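The marksman's correction can be sketched as the classic perceptron learning rule. The example below is only an illustration under stated assumptions: the four-cell "retina", the training samples, the learning rate and the function names are all invented here, and this is not a reconstruction of Rosenblatt's actual machine.

```python
def predict(weights, bias, x):
    """Fire (1) if the weighted sum plus bias is positive, else 0."""
    total = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Adjust weights after every mistake, like a marksman correcting aim."""
    n = len(samples[0][0])
    weights = [0.0] * n
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            # error is +1 (answered "left", should be "right"),
            # -1 (the opposite mistake) or 0 (correct answer, no change).
            error = target - predict(weights, bias, x)
            for i in range(n):
                weights[i] += lr * error * x[i]
            bias += lr * error
    return weights, bias


# Hypothetical toy data: a row of four cells with one mark on it.
# Label 0 means the mark is on the left half, 1 means the right half.
samples = [
    ((1, 0, 0, 0), 0),
    ((0, 1, 0, 0), 0),
    ((0, 0, 1, 0), 1),
    ((0, 0, 0, 1), 1),
]

weights, bias = train_perceptron(samples)

if __name__ == "__main__":
    for x, target in samples:
        print(x, "expected:", target, "predicted:", predict(weights, bias, x))
```

Each mistake strengthens the connections that would have given the right answer and weakens the others; on a separable toy problem like this one the corrections stop once every sample is classified correctly.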

Be that as it may, enthusiasm faded significantly in 1969 with the publication of the book Perceptrons by Seymour Papert and Marvin Minsky. In it, they showed that the structure of perceptrons allows them to solve only the simplest problems. This was the first winter of artificial intelligence, whose first spring, we must admit, had not borne much fruit. And this cold wind did not come out of nowhere: Marvin Minsky had been one of the originators of the very concept of "artificial intelligence" in 1955.

AI and IA collide

On August 31 of that year, he and his colleague John McCarthy sent an invitation to about ten people to take part, the following summer, in a two-month workshop at Dartmouth College on the then brand-new concept of artificial intelligence. Warren McCulloch and Claude Shannon, the father of information and telecommunications theory, were among the participants. It was Shannon who had brought Minsky and McCarthy to Bell Labs, the birthplace of the transistor and the laser. Bell Labs would also become one of the centers of the revival of neural networks in the 1980s.

In parallel, two new movements took shape, and Stanford University became their battlefield. On one side stood the acronym AI, "artificial intelligence", understood in a different sense from neural networks and championed by John McCarthy (who had left the Massachusetts Institute of Technology to set up his laboratory at Stanford). On the other side stood IA, "augmented intelligence", reflecting the new approach of Douglas Engelbart. He was hired in 1957 by the Stanford Research Institute, an independent institution founded in 1946 that worked with the private sector.

Douglas Engelbart had a difficult path behind him. He worked as a radar technician during the Second World War, then resumed his studies and defended his thesis. Before joining Stanford, he even founded his own company, but it lasted only two years. In his new position, he began to implement his vision of augmenting human abilities. He had a clear idea of how "colleagues sit in different rooms at similar workstations, which are connected to the same information system, and can closely interact and exchange data," says sociologist Thierry Bardini.

This vision was put into practice in December 1968, ten years after the unveiling of the Perceptron, during a demonstration of the oN-Line System, which featured an on-screen text editor, hyperlinks between documents, graphics and a mouse. Douglas Engelbart was a visionary, but he probably looked too far into the future to win real recognition at the time.

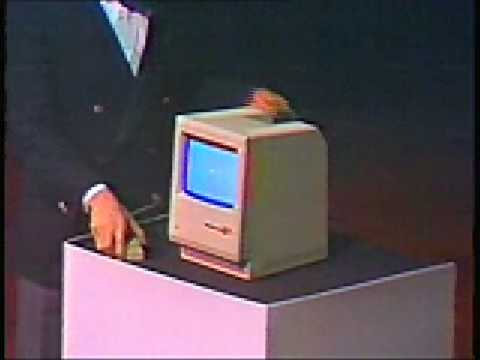

January 1984, the first Macintosh

John McCarthy, for his part, called this system excessively "dictatorial" because it imposed a particular approach to structuring text. This bold scientist, who like Engelbart was funded by the American army, put forward his own symbolic concept of artificial intelligence. For this he relied on LISP, one of the first programming languages, which he himself had developed. The idea was to imitate the thought process with a logical chain of rules and symbols, and thereby to produce a thought or at least a cognitive function. This has nothing to do with networks of independent neurons, which can learn but are unable to explain their choices. Apart from a robotic arm that poured punch and amused everyone by knocking over the glasses, the new approach proved quite successful with what were long called "expert systems." Chains of rules allowed machines to analyze data in a wide variety of fields: finance, medicine, manufacturing, translation.
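The "chain of rules" idea behind expert systems can be illustrated with a tiny forward-chaining sketch. It is written in Python rather than LISP for readability, and the rule base, fact names and function name are entirely hypothetical; real expert systems were far larger and more elaborate.

```python
def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new fact can be derived.

    Each rule is a pair (premises, conclusion): when every premise is
    already known, the conclusion is added to the known facts.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical toy rule base, invented for illustration only.
rules = [
    (frozenset({"fever", "cough"}), "suspect_flu"),
    (frozenset({"suspect_flu"}), "recommend_rest"),
]

derived = forward_chain({"fever", "cough"}, rules)

if __name__ == "__main__":
    print(sorted(derived))
```

Unlike a neural network, such a system can explain its conclusion: the answer is traceable to the exact rules that fired along the way.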

In 1970, his colleague Minsky made the following statement to Life magazine: “In eight years we will have a machine with the intelligence of an average person. That is, a machine that can read Shakespeare, change the oil in a car, joke, fight.”

The victory of the symbolic approach

Apparently, artificial intelligence does not like prophecies. In 1973, a report published in England cooled the hotheads: “Most scientists who work on artificial intelligence and related fields admit that they are disappointed with what has been achieved over the past 25 years. (…) In none of the camps have the discoveries made so far yielded the promised results.”

The following years confirmed this diagnosis. In the 1980s, AI businesses went bankrupt or changed fields. The building that housed McCarthy's laboratory was demolished in 1986.

Douglas Engelbart won. In January 1984, Apple released its first Macintosh, putting most of the engineer's ideas into practice.

Thus, victory went not to the artificial intelligence that Minsky and McCarthy dreamed of, but to Engelbart's augmented intelligence. All of this led to the development of efficient personal computers, while artificial intelligence reached a dead end. The symbolic approach had proved stronger than neural networks. Nevertheless, our story does not end there, and neural networks would make themselves heard again.

David Larousserie