If you don't live on social media, then most likely you don't live at all. Half of the world's population could hand you that disappointing diagnosis. In any case, according to statistics, that is how many people are registered on social networks today. In Russia, the number of users is about 30 million.

Psychologists have already written volumes about network addiction, which they compare to drug addiction. Jaron Lanier, who is credited with coining the term "virtual reality", helped create the technology, and belongs to Silicon Valley's cyber elite, even wrote a book about why you should flee social networks. Although the book became a bestseller, such appeals are unlikely to sway the broad mass of users. The population of social networks is growing rapidly, and experts say that by the middle of the century the networks will have ensnared almost everyone on the planet. Unless, of course, humanity changes its mind.

And there is something to think about. Today's networks host interest clubs not only for philatelists and theatergoers but also for people drawn to suicide and to drugs. Here you can find instructions for novice terrorists, recruit comrades-in-arms to fight the current government, and come across much else that is, frankly, harmful. Recall, for example, the wave of suicides during the boom of a popular online game whose final goal is suicide. Users of social networks, overwhelmingly teenagers, are contacted by a "curator" who works through fake accounts and so cannot be identified. First, the curator explains the rules: "do not tell anyone about this game", "always complete tasks, whatever they may be", "if you fail a task, you are excluded from the game forever and will face bad consequences."

Politicians, too, are already talking about the phenomenon of the networks, which overthrow governments and even elect presidents. The techniques are surprisingly simple: fakes about some outrageous action by the authorities are planted online, followed by calls to take to the streets in protest. Many examples are known, among them Egypt, South Sudan, and Myanmar.

How can society be protected from the destructive influence of social networks? The question is especially pressing for the younger generation, which makes up the overwhelming majority of network users.

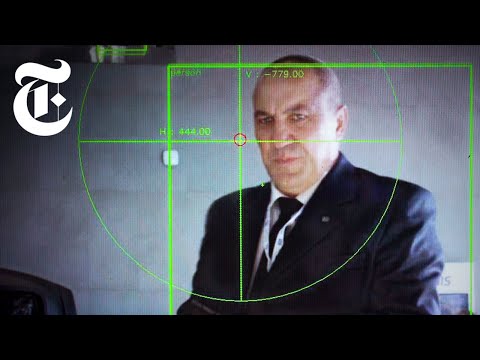

In principle, for specialists, psychologists in particular, this is not a problem. But the volume of information of every kind on the networks, negative material included, is so huge that even the best professionals cannot process it "manually". Only technology can do this, above all artificial intelligence (AI) built on neural networks that mimic the structure and functioning of the brain. A team of psychologists from the First St. Petersburg State Medical University named after I. I. Pavlov and information technology specialists from the St. Petersburg Institute for Informatics and Automation of the Russian Academy of Sciences has taken on this task.

“We are talking about using AI to identify different groups of people prone to destructive behavior,” Professor Igor Kotenko, who heads this group of scientists, told the RG correspondent. “Psychologists gave us seven types of such behavior: for instance, a negative attitude towards life that can lead to suicide, a tendency towards aggression or protest, threats against a specific person or group of people, and so on. Our task is to create an AI that can work with these signs, to ‘hammer’ them into it, figuratively speaking, so that it can continuously analyze the huge volume of information circulating on the network and catch the various warning signs.”

At first glance, the task is not very difficult. After all, every resident of a social network is, as they say, in plain sight. On his page you can see what he is interested in, what he thinks, with whom he communicates, what he does himself and what he urges others to do. Everything is there at a glance: watch and take action. If only it were that obvious. The fact is that a person's thoughts and behavior are in most cases far less simple and unambiguous than they might seem. The devil is in the details, and where everything is obvious to a specialist, an AI may well be mistaken. For example, Canadian scientists taught an AI to tell “hate speech” apart from crude jokes and merely offensive remarks. The system turned out to be rather imperfect: it missed many plainly racist statements.

In short, it all depends on how the AI is taught. The system learns from examples, just as a child does. After all, no one explains to a child that a cat has this kind of whiskers, these ears, that tail. The child is shown the animal many times and told that it is a cat, and then repeats the lesson alone many times on seeing another cat.

The neural networks created by the St. Petersburg scientists will learn in the same way. They will be shown various examples of destructive behavior. Keeping in its "brain" the signs developed by the psychologists, the AI will memorize them so that it can then catch them in the huge streams of information on social networks. Professor Kotenko emphasizes that the AI's task is only to flag potentially destructive behavior; the last word belongs to the specialists. Only they will be able to say whether the situation is truly alarming and calls for urgent action, or whether the alarm is false.
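To make the idea of learning from examples and then handing the final call to a specialist more concrete, here is a minimal sketch. It is not the St. Petersburg team's system: the article describes neural networks, while this illustration swaps in a much simpler technique, a naive Bayes text classifier. The training posts, the labels "alarming" and "neutral", the function names, and the 0.8 threshold are all invented for the example.

```python
import math
from collections import Counter

# Invented toy examples (label, text); a real system would need
# thousands of posts labelled by psychologists.
TRAINING = [
    ("alarming", "i want to hurt them all"),
    ("alarming", "life has no meaning anymore"),
    ("alarming", "they will pay for this i will hurt them"),
    ("neutral", "great concert last night with friends"),
    ("neutral", "looking for rare stamps to trade"),
    ("neutral", "the new theater season starts next week"),
]


def tokenize(text):
    """Split a post into lowercase words."""
    return text.lower().split()


def train(examples):
    """Count words per label, the way a child accumulates 'cat' sightings."""
    word_counts = {}
    doc_counts = Counter()
    vocab = set()
    for label, text in examples:
        doc_counts[label] += 1
        counts = word_counts.setdefault(label, Counter())
        for word in tokenize(text):
            counts[word] += 1
            vocab.add(word)
    return word_counts, doc_counts, vocab


def alarm_probability(model, text):
    """Estimate P(alarming | text) with Laplace-smoothed naive Bayes."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    log_scores = {}
    for label in doc_counts:
        counts = word_counts[label]
        total_words = sum(counts.values())
        score = math.log(doc_counts[label] / total_docs)
        for word in tokenize(text):
            if word in vocab:  # words never seen in training are ignored
                score += math.log((counts[word] + 1) / (total_words + len(vocab)))
        log_scores[label] = score
    # Convert log scores to normalized probabilities.
    top = max(log_scores.values())
    weights = {label: math.exp(s - top) for label, s in log_scores.items()}
    return weights["alarming"] / sum(weights.values())


def flag_for_review(model, text, threshold=0.8):
    """Only flag the post; a human specialist makes the final decision."""
    return alarm_probability(model, text) >= threshold


model = train(TRAINING)
print(flag_for_review(model, "i will hurt them"))         # True
print(flag_for_review(model, "looking for rare stamps"))  # False
```

The threshold is the design choice that matters here: lowering it sends more posts to the specialists and raises the number of false alarms, while raising it risks missing cases like the racist statements the Canadian system overlooked.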

The project was supported by the Russian Foundation for Basic Research.

Yuri Medvedev